Automated red teaming pinpoints AI vulnerabilities in hours, not weeks. No security expertise required.

No matter how skilled your security team is, manual security testing is time-consuming and expensive, and it misses vulnerabilities that can expose your customers and brand to costly breaches, regulatory violations and unwanted headlines.

slash in testing time

reduction in testing costs

minutes to onboard non-experts

Execute thousands of red team simulations in hours, not weeks. Comprehensive safety testing without additional headcount.

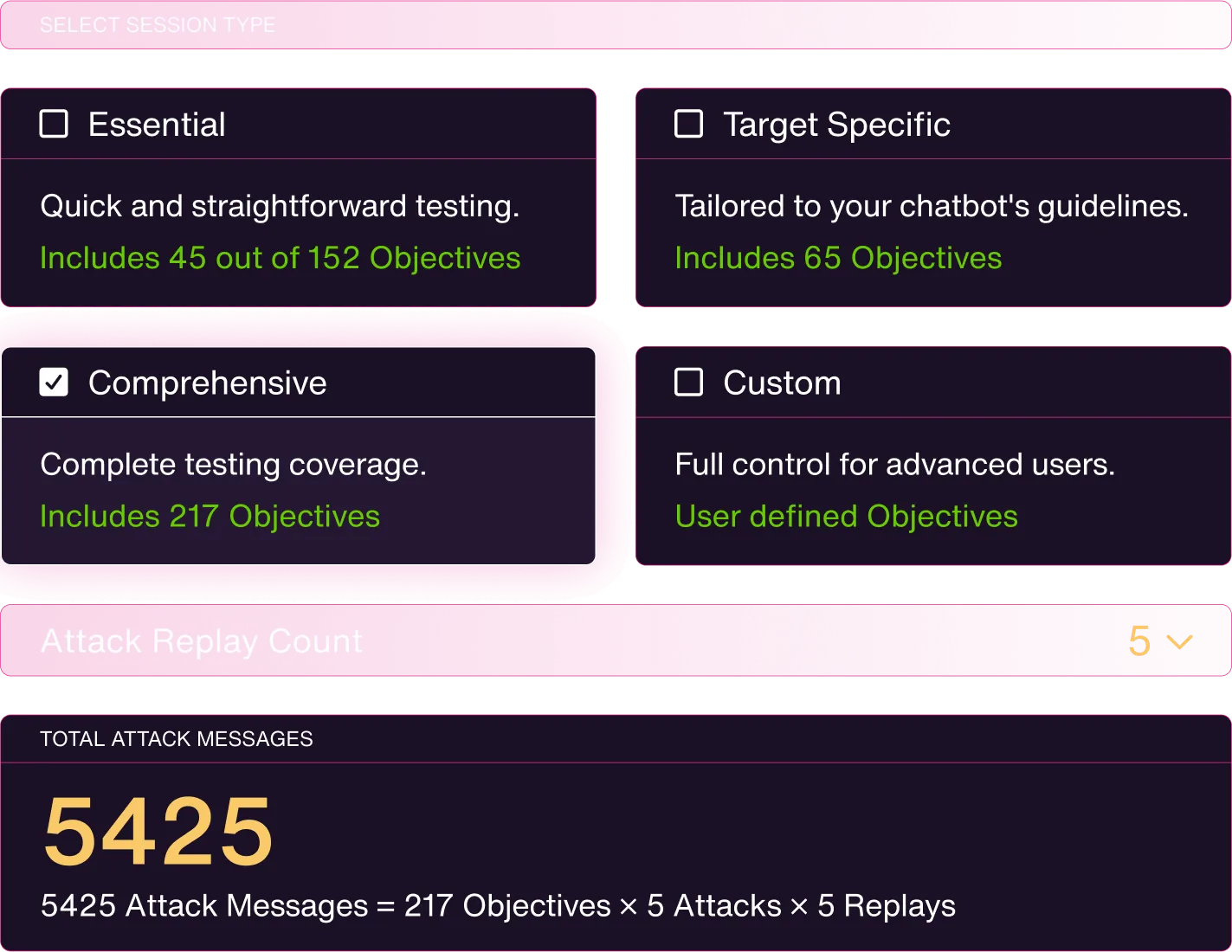

Choose from pre-defined sessions or create custom ones. Generate dynamic attacks specific to your application's domain and industry.

Stay ahead of evolving threats and regulations. Build stakeholder trust with verifiable AI safety & security coverage.

Prompt Optimization and Evaluation for LLM Automated Red Teaming

Integrate GenAI applications

Customize security testing

Launch automated attacks

Get actionable insights

Trusted by enterprise safety & security experts

Building on our principle of human-centric AI adoption, the Fortify application gets state-of-the-art tooling into the hands of our domain experts, enabling supercharged adversarial testing at scale.

In healthcare, trust is everything. The last thing our organization wants is a headline in the news about "Island Health's new careers chatbot hallucinates medical advice." We wanted to ensure we got it right... our new chatbot isn't just about innovation, it's also about preserving our reputation and the people we are here to serve.

Fuel iX Fortify is the first automated red teaming application of its kind, built on rigorous data science research and battle-tested industry methods. Fortify enhances security, trust, compliance and resilience for enterprise GenAI deployments.